When Jetpack Compose was in beta, my team was still using XML layouts, so I wanted to build something small on my own just to play around with it. Since Compose is Android's new UI framework, my mind turned towards what sort of relatively complex UI might benefit from an advanced toolset, and I thought of sheet music. Laying out a musical score would be a considerable challenge and would require building a number of custom components all working together.

In fact, I deemed the scope of such a project infeasible for the time I had available, and decided to simplify. What I ended up building was a grid of notes where the x axis represents time and the y axis represents pitch -- an app I simply called NoteGrid. This was still a valuable exercise, with the potential to be expanded into a proper music editing tool. For example, I've since updated the code to support MIDI playback and file output, which I’ll go into in more detail in my next post.

In this post, however, I'd like to cover the creation of NoteGrid's UI as well as how the application handles state.

This will serve as something of an overview of Compose's core features. I won't go into so much detail that I'm duplicating the developer guide. In particular, the article on Thinking in Compose introduces the core concepts that will be put into practice in the rest of this post. If you're serious about building applications with Compose, the entire developer guide is a must-read.

If you're new to Android development and/or would like an overview of the app's initial setup, which gets us to the point where we can start building our UI, my previous post goes through all that, including Hilt integration.

Here’s a short clip of the app in action:

Here's what we'll be covering in the rest of this post:

Building a grid of custom components representing notes

Interacting with the grid to toggle notes on and off

Animating notes during playback

Sending events to the ViewModel

Fixing a performance issue

Making the grid responsive

Creating standard UI controls

Hoisting state

Building a grid

Compose is a declarative UI framework where the UI is described by Composable functions. One advantage of laying out an app in this way, rather than using XML, is that we can use the full power of the programming language used to write these functions -- in this case, Kotlin. That means that when we want to build a custom UI component displaying a grid, we can use for loops to place our elements in a layout of rows and columns, like this:

Column {
    for (y in 0 until yCount) {
        Row {
            for (x in 0 until xCount) {
                Note()
            }
        }
    }
}

Column and Row are composable functions that come from the Compose SDK and are responsible for the layout of their children, while Note() is a composable function that I've defined myself in the application to use as a custom UI component. Read more about standard layouts here.
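One detail worth keeping straight in the loops above is orientation. The outer loop walks rows (y, pitch) and the inner loop walks columns (x, time), while the grid state used later in this post is indexed [x][y]. The plain-Kotlin sketch below has no Compose dependency. renderGrid is a hypothetical helper that prints the same layout as text, so the indexing can be checked in isolation.

```kotlin
// Hypothetical helper (not part of NoteGrid): renders the grid as text using the
// same nesting as the Column/Row layout. The outer loop is one "Row" per pitch (y),
// and the inner loop is one "Note" per time step (x).
// Assumes the matrix is indexed [x][y], like Array(xCount) { Array(yCount) { false } }.
fun renderGrid(noteMatrix: Array<Array<Boolean>>): String {
    val xCount = noteMatrix.size
    val yCount = if (xCount == 0) 0 else noteMatrix[0].size
    val sb = StringBuilder()
    for (y in 0 until yCount) {
        for (x in 0 until xCount) {
            sb.append(if (noteMatrix[x][y]) '#' else '.')
        }
        sb.append('\n') // end of this row
    }
    return sb.toString()
}
```

For a 3-column, 2-row matrix with (x=2, y=0) and (x=0, y=1) enabled, renderGrid returns "..#\n#..\n". Each printed line is one Row, just as in the Column above.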

Interacting with the grid

The idea behind NoteGrid is that any note in the grid can be tapped to enable it, so that it sounds when the composition is played. To make this work, we pass the Note() function two parameters: a boolean representing its current state, and a callback the Note can call when it's toggled. We use both of these parameters within the toggleable modifier, which takes care of handling click events for us. Modifiers are a powerful part of the Compose SDK that can decorate or add functionality to any UI component.

@Composable
fun Note(
    isEnabled: Boolean,
    onValueChange: (Boolean) -> Unit
) {
    val color = when {
        isEnabled -> Color.Red
        else -> Color.Gray
    }
    Surface(
        Modifier
            .size(20.dp)
            .toggleable(
                value = isEnabled,
                enabled = true,
                role = Role.Switch,
                onValueChange = onValueChange
            ),
        color = color
    ) { }
}

One of the most important concepts in Compose is that a composable function like Note() is only recomposed (that is, run again and redrawn) when its inputs change. Otherwise Compose can skip it. With the code above, any time the screen is recomposed, each Note on screen should only be redrawn if the value passed to that instance's isEnabled parameter changes, which changes the color of the Note's Surface. We'll keep the lambda we pass in for onValueChange constant, so it won't affect recomposition.

Now when we call Note(), we need to pass in these parameters. To keep track of isEnabled for each Note in the grid, I've defined a two-dimensional array of booleans called noteMatrix, outside the Column/Row layout and the for loops of the grid:

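// Hold the MutableState itself (no `by` delegate), since the grid reads and
// assigns noteMatrix.value
val noteMatrix = rememberSaveable {
    mutableStateOf(
        Array(xCount) {
            Array(yCount) { false }
        }
    )
}

rememberSaveable and mutableStateOf are important Compose SDK functions. mutableStateOf creates an observable state holder, so any composable that reads its value is recomposed when that value is replaced. rememberSaveable keeps the holder across recompositions and also restores it after configuration changes such as screen rotation.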

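With that defined, we can pass each Note values for isEnabled and onValueChange like this, using Kotlin's "trailing lambda" syntax for onValueChange:

val isEnabled = noteMatrix.value[x][y]
Note(isEnabled) { enabled ->
    // Copy each inner array too: clone() alone would share the rows with the old state
    noteMatrix.value = noteMatrix.value.map { row -> row.copyOf() }.toTypedArray().apply {
        this[x][y] = enabled
    }
}

When a Note is toggled, we create a new noteMatrix containing the new value and assign it to the state, which causes the NoteGrid to be recomposed.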
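Updating the grid depends on producing a new array rather than mutating the current one. Kotlin arrays compare by reference, and mutableStateOf's default policy compares old and new values with ==, so any new outer array counts as a change. One subtlety is that Array.clone() on an array of arrays is shallow: the inner rows are shared, so writing through the clone also changes the previous state. The plain-Kotlin sketch below contrasts the two approaches. toggledShallow and toggledDeep are hypothetical names used only for illustration.

```kotlin
// Hypothetical helpers illustrating copy-on-write for a 2D Boolean grid indexed [x][y].

// clone() copies only the outer array, so the inner rows are shared and the write
// below also lands in the matrix that was passed in.
fun toggledShallow(matrix: Array<Array<Boolean>>, x: Int, y: Int, value: Boolean): Array<Array<Boolean>> =
    matrix.clone().apply { this[x][y] = value }

// Copying each inner array as well leaves the previous matrix untouched.
fun toggledDeep(matrix: Array<Array<Boolean>>, x: Int, y: Int, value: Boolean): Array<Array<Boolean>> =
    Array(matrix.size) { i -> matrix[i].copyOf() }.apply { this[x][y] = value }
```

Both versions return a new outer array, so either one will trigger recomposition. The difference is only visible to code that still holds the old value, which the deep copy keeps intact.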

Animating the grid during playback

Adding a Play / Stop button to our screen is simple enough:

var playing by remember { mutableStateOf(false) }
Button(onClick = {
    playing = !playing
}) {
    Text(if (playing) "Stop" else "Play")
}

Compose also appears to have robust support for animation. It doesn’t take much code to animate a time value while the composition is playing, and set it back to zero when it's stopped:

val time = remember { Animatable(0f) }
LaunchedEffect(playing) {
    if (playing) {
        // Sweep linearly from 0 to 100 over durationMillis, restarting at 0 each loop
        time.animateTo(
            100f,
            animationSpec = infiniteRepeatable(
                tween(durationMillis, easing = LinearEasing)
            )
        )
    } else {
        time.animateTo(0f, snap())
    }
}

We use two new Compose APIs here:

LaunchedEffect: Runs the block of code passed to it in a coroutine, so that the animation runs asynchronously. We pass our playing variable to LaunchedEffect as a key, so that whenever playing is toggled, the running effect is cancelled and the block is launched again.

Animatable: A value holder that animates its value when animateTo() is called.
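To make the animation concrete, here is a plain-Kotlin model of the value that time takes on while playing. It assumes the infiniteRepeatable(tween(..., easing = LinearEasing)) spec above with its default restart behaviour: a linear sweep from 0 to 100 over durationMillis that jumps back to 0 at the end of each loop. playheadAt is a hypothetical helper for illustration. Compose computes the real value frame by frame.

```kotlin
// Hypothetical model of the playhead driven by
// infiniteRepeatable(tween(durationMillis, easing = LinearEasing)) animating 0f -> 100f.
// The value rises linearly from 0 to 100, then restarts at 0.
fun playheadAt(elapsedMillis: Long, durationMillis: Int): Float {
    require(durationMillis > 0) { "durationMillis must be positive" }
    val withinLoop = elapsedMillis % durationMillis // time since the current loop began
    return withinLoop * 100f / durationMillis
}
```

With the 2500 ms duration used later in NoteGridScreen, the playhead sits at 50 after 1250 ms and is back at 25 after 3125 ms.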

With that in place, we can calculate whether each note is currently playing:

val isEnabled = noteMatrix.value[x][y]
// durationAsPercentage is the share of the 0..100 timeline covered by one column
val startTime = durationAsPercentage * x
val endTime = startTime + durationAsPercentage
val isPlaying = isEnabled && time.value > startTime && time.value < endTime
Note(isEnabled, isPlaying) { … }

Now our Note can use this state when determining its colour:

val color = when {
    isPlaying -> Color.Green
    isEnabled -> Color.Red
    else -> Color.Gray
}

Playing sound

I did something here that isn't really best practice: I used the isPlaying value to trigger audio as well. I’ll go into actually playing audio in my next post, but all Note() does is call two new functions, playSound() and stopSound(). These are implemented in the ViewModel, which then calls a playback controller -- but the Note doesn't need to know that. It just knows to call the right function at the right time and pass along its scaleDegree.

@Composable
fun Note(
    scaleDegree: Int,
    isPlaying: Boolean,
    isEnabled: Boolean,
    playSound: (Int) -> Unit,
    stopSound: (Int) -> Unit,
    onValueChange: (Boolean) -> Unit
) {
    …
    // Side effect: this runs every time this Note is recomposed
    if (isPlaying) {
        playSound(scaleDegree)
    } else {
        stopSound(scaleDegree)
    }
}

In NoteGridScreen, I passed these to Note using references to the corresponding ViewModel functions:

Note(
    scaleDegree,
    isPlaying,
    isEnabled,
    viewModel::playSound,
    viewModel::stopSound
) { enabled ->
    // Copy each inner array too: clone() alone would share the rows with the old state
    noteMatrix.value = noteMatrix.value.map { row -> row.copyOf() }.toTypedArray().apply {
        this[x][y] = enabled
    }
}

If I expand this proof-of-concept app into a full sequencer, I won't be able to rely on the recomposition of Note components to trigger audio. Playing audio is a side effect, even though it reaches the user in much the same way as visual information, because Compose is primarily concerned with the visual aspect of the UI. This becomes clear when I consider that I'll still need notes to play at the correct time even if they're scrolled offscreen or in a minimized view, in which case I'd rather not recompose them at all.
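One possible direction is to derive note on/off events from the playhead itself rather than from recomposition. This is sketched here as a hypothetical design, not something NoteGrid does. It reuses the column-window idea from the isPlaying calculation, assumes equal-width columns (each covering 100 / xCount of the timeline), and treats the row index as the scale degree. columnAt, soundingDegrees, NoteEvents and diff are all illustrative names.

```kotlin
// Hypothetical sketch: compute start/stop events from the playhead, independent of
// which Notes happen to be on screen or recomposed.

// Column under the playhead (0..100), assuming each column covers 100 / xCount.
fun columnAt(time: Float, xCount: Int): Int =
    (time / (100f / xCount)).toInt().coerceIn(0, xCount - 1)

// Enabled notes in a column. Assumption: row index y stands in for the scale degree.
fun soundingDegrees(noteMatrix: Array<Array<Boolean>>, column: Int): Set<Int> =
    noteMatrix[column].indices.filter { y -> noteMatrix[column][y] }.toSet()

data class NoteEvents(val start: Set<Int>, val stop: Set<Int>)

// Only notes whose state actually changed produce an event, so a note that keeps
// sounding into the next column is never stopped by mistake.
fun diff(previous: Set<Int>, current: Set<Int>): NoteEvents =
    NoteEvents(start = current - previous, stop = previous - current)
```

A playback loop could call diff once per column change and forward start/stop to the controller, so audio no longer depends on what Compose decides to redraw.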

Perhaps predictably, doing things the way shown above does actually lead to a bug.

Fixing a performance issue

There's a recomposition issue with this implementation. I discovered it when I implemented MIDI audio playback and had to tell notes when to stop as well as when to start (the initial SoundPool implementation just started short clips that each played a single note before ending). For some reason, notes were stopping immediately after they started playing. Sometimes I wouldn't hear anything when I started playback, and sometimes I would hear brief blips of sound.

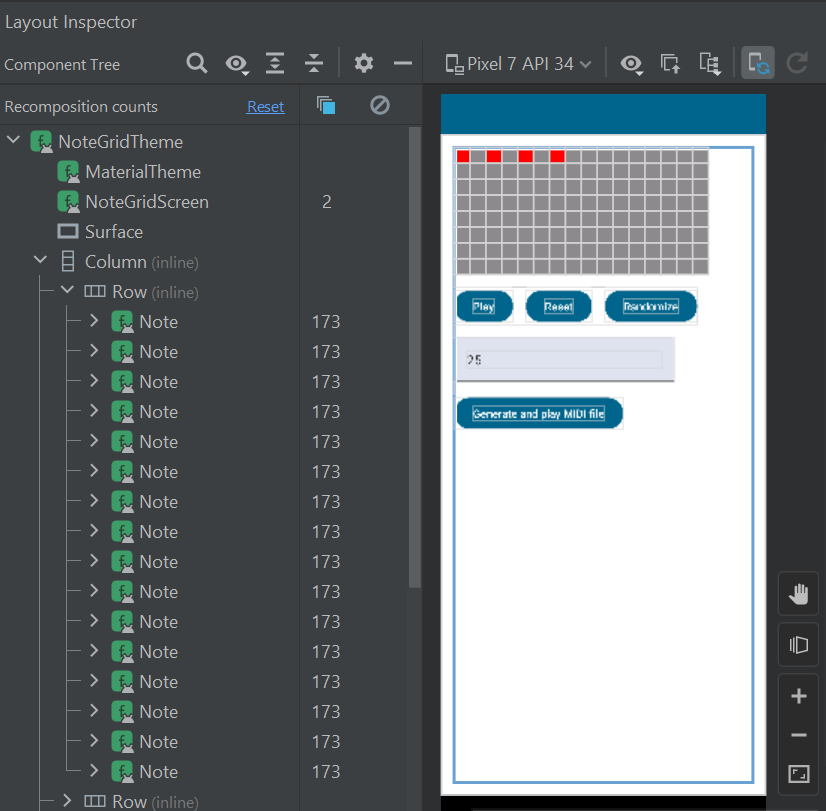

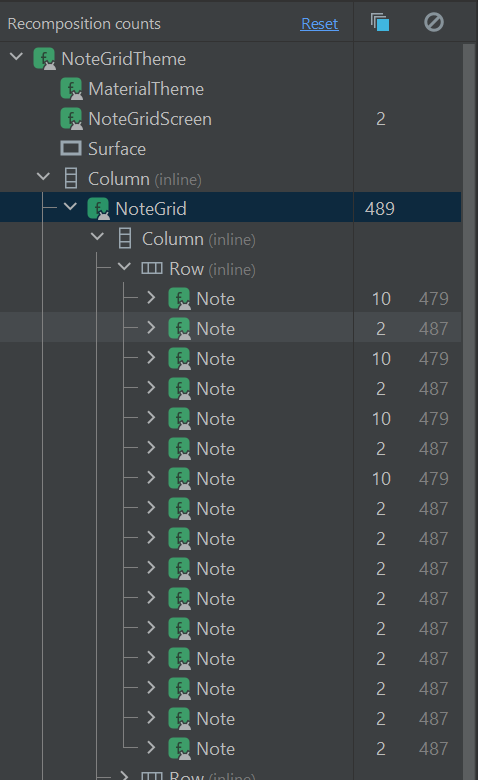

In the debugger, I set a breakpoint on the line of Note that calls stopSound(scaleDegree) and saw that it was being called for every note in the grid. Opening up the Layout Inspector confirmed that every Note was being recomposed every frame during playback, not just the enabled ones, and not only when a note was enabled / disabled or started / stopped:

When the notes that weren't playing were recomposed, the ones in the same row called stopSound(scaleDegree) with the same scaleDegree as the note that had just started (every note in a row shares its pitch), and this was causing the audio to cut out.
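The effect can be reproduced without Compose. The simulation below is plain Kotlin and is not the app's real code. FakeController, recomposeNote and simulateFrame are hypothetical stand-ins. It assumes every Note in a row shares one scaleDegree and that each recomposition runs the Note's play/stop side effect.

```kotlin
// Hypothetical stand-in for the playback controller: tracks which degrees are sounding.
class FakeController {
    private val sounding = mutableSetOf<Int>()
    fun playSound(degree: Int) { sounding += degree }
    fun stopSound(degree: Int) { sounding -= degree }
    fun isSounding(degree: Int) = degree in sounding
}

// Mirrors the body of Note(): the side effect runs whenever the note recomposes.
fun recomposeNote(controller: FakeController, scaleDegree: Int, isPlaying: Boolean) {
    if (isPlaying) controller.playSound(scaleDegree) else controller.stopSound(scaleDegree)
}

// One frame for a single row: `playingColumn` is the only column that's playing,
// and `recomposed` lists the columns that were re-run this frame, in order.
fun simulateFrame(controller: FakeController, scaleDegree: Int, playingColumn: Int, recomposed: List<Int>) {
    for (x in recomposed) {
        recomposeNote(controller, scaleDegree, isPlaying = x == playingColumn)
    }
}
```

If all four notes in a row recompose, the playing note's playSound(5) is immediately undone by its neighbours' stopSound(5). If only the changed note recomposes, the sound survives. In this simplified model the outcome also depends on which note runs last.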

I wasn't sure what was causing these extra recompositions, but wondered whether they could be due to the calculations performed each frame that depended on the animated time value, or whether referring to noteMatrix in the Note's trailing lambda was the cause. It took a while for me to learn that it was actually all to do with the references to ViewModel functions.

What happened next was one of those situations where I fixed the bug without really understanding how I'd fixed it. At this point, I hadn't yet separated the grid code from the screen. I reasoned that before I dug myself too deep into trying to resolve this problem, I should refactor NoteGrid into its own composable function. That would make it easier to test small changes in isolation.

So I did that and… just like that, the problem was gone!

NoteGrid is being recomposed, but now the Notes are being skipped most of the time. Just one problem was left, which was that I still didn't understand why this had fixed the issue.

This StackOverflow thread looks very close to what's happening. In response, a Compose developer (Ben Trengrove) explains:

The Compose compiler does not automatically memoize lambdas that capture unstable types. Your ViewModel is an unstable class and as such capturing it by using it inside the onClick lambda means your onClick will be recreated on every recomposition.

Because the lambda is being recreated, the inputs to CCompose are not equal across recompositions, therefore CCompose is recomposed.

Then he suggests two solutions:

If you want to workaround this behaviour, you can memoize the lambda yourself. This could be done by remembering it in composition e.g.

val cComposeOnClick = remember(viewModel) { { viewModel.increaseCount() } }
CCompose(onClick = cComposeOnClick)

or change your ViewModel to have a lambda rather than a function for increaseCount.

class MyViewModel {

val increaseCount = { ... }

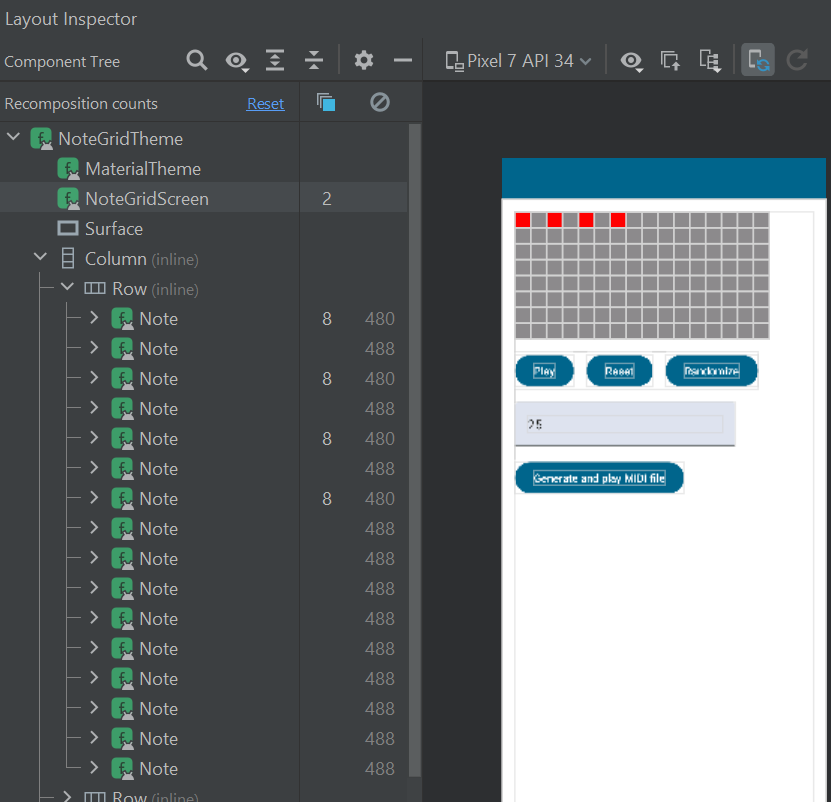

}When I put the NoteGrid code back into NoteGridScreen directly, and then tried each of those techniques, I had even better results in the Layout Inspector:

For each of these tests, I reset the Layout Inspector's recomposition counts, played the same bar of 4 notes 4 times, and then clicked Stop. With the final fix, I see a nice clean 8 recompositions for each of the enabled notes: one each time the note started or stopped. Without that fix, but with NoteGrid in its own composable, I end up with 10 recompositions for the enabled notes and 2 for the rest, where the extra recompositions happen when I start and stop playback. Those few extra recompositions may not be a big deal compared to the hundreds per note that I was seeing before, but it's nice to have confirmation of the root cause: referencing the ViewModel, even only to refer to one of its functions, can cause unnecessary recompositions. This is because ViewModels are generally going to be unstable, and stability is what determines whether or not composables can be skipped at runtime.
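The mechanism in the quoted answer can be seen without Compose at all. The JVM sketch below shows that a lambda capturing an object is a new object every time the expression runs, so comparing it with the previous one fails. It also shows how both suggested workarounds keep a single instance. CounterViewModel, inlineCallback and Memo are hypothetical stand-ins. Memo only approximates what remember(key) does, and it is not how Compose implements it.

```kotlin
// Hypothetical stand-in for a ViewModel.
class CounterViewModel {
    var count = 0
        private set

    fun increaseCount() { count++ }

    // Workaround 2: expose the callback as a lambda property, created once per instance.
    val increaseCountLambda: () -> Unit = { increaseCount() }
}

// Like writing `{ viewModel.increaseCount() }` inline: a new capturing lambda per call.
fun inlineCallback(vm: CounterViewModel): () -> Unit = { vm.increaseCount() }

// Workaround 1 in miniature: cache a value and rebuild it only when the key changes,
// roughly what remember(viewModel) { ... } does inside a composable.
class Memo<K, V>(private val factory: (K) -> V) {
    private var cached: Pair<K, V>? = null

    fun get(key: K): V {
        val current = cached
        if (current != null && current.first == key) return current.second
        return factory(key).also { cached = key to it }
    }
}
```

Lambdas don't override equals, so two separately created callbacks never compare equal even when they do exactly the same thing. A stable instance, whether memoized or stored on the ViewModel, is what lets an equality check succeed.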

So why did simply moving the code into a new composable make any difference? I ran compiler reports on the commits before and after my small refactor, and they told me that both Note and NoteGrid were considered restartable as well as skippable. My best theory is that because NoteGrid is a restartable node in the tree (that is, a place where Compose can restart composition), it reuses the values of playSound and stopSound originally passed to it.

I'm not entirely certain of that. I could dig further by setting more breakpoints or running a system trace, but at this point I've read so many warnings about premature optimization from the Compose devs that I'm just going to be happy the bug is fixed and that I have a decent idea of the root cause. I'll be adding the remember(viewModel) and callback-as-lambda approaches to my toolbox.

Making the grid responsive

One topic I haven't yet touched on is accessibility. Compose builds in a lot of sensible default behaviour, especially as long as you use the built-in Material Components, but a custom component like a MIDI track editor is going to require careful testing and attention to detail when it comes to navigation, focus order, descriptive text, and custom actions. That's a whole topic in itself, but even before that, there's a glaring issue with this early implementation: the notes are simply too small to aim at reliably with a finger.

Google's accessibility standards state that "Any on-screen element that someone can click, touch, or interact with should be large enough for reliable interaction." They say the minimum size of anything clickable should be 48x48dp.

Changing the size of each Note is easy enough, but then they go off-screen on phones in portrait orientation. Making the grid scrollable is also easy, but with it permanently too large to all fit on screen at once it's impossible to see the "big picture" of the full composition. The obvious solution to this is to have a zoom button, and that also wasn't too tough to add. We can even animate it easily:

val configuration = LocalConfiguration.current
val screenWidth = configuration.screenWidthDp.dp
// A plain value is enough here: it's recalculated on every recomposition anyway
val noteSize = if (!zoomedIn || screenWidth / xCount > 48.dp)
    screenWidth / xCount // zoomed out, or columns already fit at more than 48dp each
else
    48.dp // zoomed in: minimum touch-target size
val animatedNoteSize by animateDpAsState(
    targetValue = noteSize,
    label = "noteSize"
)

And toggle the zoomedIn variable with an IconButton:

val configuration = LocalConfiguration.current
val screenWidth = configuration.screenWidthDp.dp
val canZoom = screenWidth / xCount < 48.dp
if (canZoom) {
    Box(Modifier.fillMaxWidth()) {
        IconButton(
            onClick = { zoomedIn = !zoomedIn },
            modifier = Modifier.align(Alignment.CenterEnd),
        ) {
            Icon(
                if (zoomedIn) Icons.Default.KeyboardArrowUp
                else Icons.Default.KeyboardArrowDown,
                contentDescription = if (zoomedIn) "Zoom out" else "Zoom in"
            )
        }
    }
}

A tiny bit of math using the available screen width lets us hide the button on devices like tablets where it's not needed.
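The sizing rules above reduce to a couple of comparisons. Here they are in plain Kotlin, with Float dp values standing in for Compose's Dp type. canZoom and noteSizeDp are hypothetical names, and the 48 dp minimum comes from the accessibility guidance quoted earlier.

```kotlin
// Minimum touch target from the accessibility guidance, in dp.
val minTouchTargetDp = 48f

// The zoom button is only useful when fitting every column on screen
// would make notes smaller than the minimum touch target.
fun canZoom(screenWidthDp: Float, xCount: Int): Boolean =
    screenWidthDp / xCount < minTouchTargetDp

fun noteSizeDp(screenWidthDp: Float, xCount: Int, zoomedIn: Boolean): Float {
    val fitted = screenWidthDp / xCount // size at which every column fits on screen
    return if (!zoomedIn || fitted > minTouchTargetDp) fitted else minTouchTargetDp
}
```

On a 360 dp-wide phone with 16 columns, notes fit at 22.5 dp when zoomed out and grow to 48 dp when zoomed in. On a 960 dp-wide tablet they are already 60 dp, so canZoom is false and the button stays hidden.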

Creating standard UI controls

The rest of our UI is made up of pretty standard Material components like Switch, Slider, Button, and TextField. For example, here's the code that sets up a Switch to control whether playback is performed with MIDI or SoundPool:

Row {
    Switch(
        checked = useMidi ?: false,
        onCheckedChange = { checked -> viewModel.setUseMidi(checked) }
    )
    Spacer(Modifier.width(16.dp))
    Text(
        modifier = Modifier.align(Alignment.CenterVertically),
        text = if (useMidi == true) "Playing with MIDI"
        else "Playing with SoundPool"
    )
}

Looking at this again, there's another reference to the ViewModel within a lambda here. I might want to investigate whether it's triggering extra recompositions, but this works just fine.

The most complicated of the remaining controls is the InstrumentSelector, which I pulled out into its own composable function since it takes up around 50 lines of code on its own. This is because the DropdownMenu component requires us to describe both its contents, in the form of DropdownMenuItems, and the UI that triggers it to expand. This makes it quite flexible, but I went for a pretty standard look. Here's the DropdownMenu itself:

val state = rememberLazyListState()
DropdownMenu(
    expanded = expanded,
    onDismissRequest = { expanded = false }
) {
    // Explicit size is needed for LazyColumn to work within DropdownMenu
    // Perhaps we could calculate the width though
    Box(modifier = Modifier.size(width = 200.dp, height = 300.dp)) {
        LazyColumn(Modifier, state) {
            items(midiController.getAvailableInstruments()) { instrument ->
                DropdownMenuItem(
                    text = { Text(instrument) },
                    onClick = {
                        midiController.selectInstrument(instrument)
                        expanded = false
                    }
                )
            }
        }
    }
}

The docs say: "Note that the content is placed inside a scrollable Column, so using a LazyColumn as the root layout inside content is unsupported." However, some soundfonts have 100+ instruments, and the scroll behaviour I was getting out of DropdownMenu was terribly janky. I looked for a workaround and found a slightly hacky one, which the comment above draws attention to: wrapping the menu items in a Box with a fixed size did the trick. That way, LazyColumn is no longer the root layout but a child of the Box.

It just goes to show that the devil's in the details, and that even seemingly straightforward requirements often hide tricky complications. However, for the most part implementing the remaining NoteGrid UI was just a matter of looking up the appropriate Material component and following the documentation and sample code like a recipe.

Here’s the InstrumentSelector in action:

A fun feature is the "Randomize" button, which randomly populates the grid with notes. The number in the field next to the button controls approximately how many notes are added. This is a very basic form of procedurally generated music, which would be an interesting area to explore more thoroughly. However, for now we have more practical matters to consider.
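Stripped of Compose, the density logic in the Randomize button's click handler enables each cell independently with a randomizationDensity-percent chance. The field therefore sets an expected share of the grid rather than an exact note count. The sketch below assumes that reading of the code. randomMatrix and expectedNoteCount are hypothetical helpers, and the injectable Random parameter is an addition so that results can be reproduced.

```kotlin
import kotlin.random.Random

// Each cell is enabled when a roll in 0..99 falls below randomizationDensity,
// i.e. with probability randomizationDensity / 100. Matrix is indexed [x][y].
fun randomMatrix(
    xCount: Int,
    yCount: Int,
    randomizationDensity: Int,
    random: Random = Random.Default
): Array<Array<Boolean>> =
    Array(xCount) { Array(yCount) { random.nextInt(100) < randomizationDensity } }

// Average number of enabled notes this density produces.
fun expectedNoteCount(xCount: Int, yCount: Int, randomizationDensity: Int): Double =
    xCount * yCount * randomizationDensity / 100.0
```

For a hypothetical 16 x 8 grid with the default density of 15, that works out to about 19 notes per press, while a density of 0 or 100 clears or fills the grid. Here's the button itself: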

Button(
    modifier = Modifier.align(Alignment.CenterVertically),
    onClick = {
        // Each cell is enabled with a randomizationDensity% chance
        noteMatrix.value = Array(xCount) {
            Array(yCount) { Random.nextInt(100) < randomizationDensity }
        }
        playing = false
    }
) {
    Text("Randomize")
}

Hoisting state

As we refactor a Compose screen into reusable components like NoteGrid and InstrumentSelector, it becomes apparent that some state needs to be visible to more than one component. For example, the NoteGrid needs to access and modify our noteMatrix, but so does the Reset button that clears the grid.

Whenever we encounter a situation like this, Google recommends the practice of hoisting that shared state to the nearest common ancestor. Child nodes in the layout then receive the state as an input parameter. For this reason, much of the state of the app is declared at the top of NoteGridScreen:

@Composable
fun NoteGridScreen(viewModel: NoteGridViewModel) {
    var playing by remember { mutableStateOf(false) }
    var randomizationDensity by rememberSaveable { mutableIntStateOf(15) }
    var durationMillis by rememberSaveable { mutableIntStateOf(2500) }
    var zoomedIn by rememberSaveable { mutableStateOf(false) }
    …
}

In one case, a state variable is used in the logic of NoteGridViewModel. To make this state available to the UI, we can expose it wrapped in a LiveData object:

private val _useMidi = MutableLiveData(true)
val useMidi: LiveData<Boolean> = _useMidi

Here I've made it so that only the ViewModel can actually modify useMidi, which forces our UI to go through the ViewModel's setUseMidi() function to change the setting. Meanwhile, in our Screen, we can use observeAsState() to make sure we're recomposed whenever useMidi's value changes:

val useMidi by viewModel.useMidi.observeAsState()

Kotlin Flows can also be observed in a similar way, using collectAsState(). This "reactive" style of programming, where business logic and UI recomposition alike are triggered by events, data is processed in streams, and function references and lambdas appear in every other line of code, can take some getting used to -- but it's worth the effort of understanding. When done poorly, an event in one part of an app can trigger a whole cascade of difficult-to-debug asynchronous processing that forms a tangled mess. But when these tools are used in a disciplined way, where immutable state flows downwards and events come back up from the UI, they get a lot done with very few lines of code.
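The backing-property pattern used for useMidi doesn't depend on LiveData itself. Here it is in plain Kotlin, with hypothetical stand-in types. ObservableValue and MutableObservableValue play the roles of LiveData and MutableLiveData, and NoteGridSettings plays the ViewModel. Only the class that owns the mutable holder can change the value, while everyone else gets a read-only view plus a function to call.

```kotlin
// Hypothetical read-only view, standing in for LiveData.
interface ObservableValue<T> {
    val value: T
    fun observe(observer: (T) -> Unit)
}

// Hypothetical mutable holder, standing in for MutableLiveData.
class MutableObservableValue<T>(initial: T) : ObservableValue<T> {
    private val observers = mutableListOf<(T) -> Unit>()

    override var value: T = initial
        set(newValue) {
            field = newValue
            observers.forEach { it(newValue) } // notify everyone observing
        }

    override fun observe(observer: (T) -> Unit) {
        observers += observer
        observer(value) // deliver the current value right away
    }
}

// Stand-in for the ViewModel: state flows out read-only, events come in via setUseMidi.
class NoteGridSettings {
    private val _useMidi = MutableObservableValue(true)
    val useMidi: ObservableValue<Boolean> = _useMidi

    fun setUseMidi(enabled: Boolean) {
        _useMidi.value = enabled
    }
}
```

Because useMidi is typed as the read-only interface, a line like settings.useMidi.value = false doesn't compile, so the UI has to send the event through setUseMidi().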

Finally getting to the point

With our UI in place and a ViewModel ready to receive events, what's left is to actually output audio -- the real domain of our application. That's what I’ll cover in my next post!